The parallel computing community has been using clusters of commodity workstations as an alternative to expensive massively parallel processors (MPPs) for several years. While MPPs can rely on custom hardware to achieve high performance, their development follows a slow pace, mainly because of their small production scale. Clusters, on the other hand, benefit from the frenetic pace at which the workstation market evolves. In fact, by the time an MPP reaches the market, processors, memories and interconnection systems with similar features are very likely to be available on the commodity market already. It therefore seems evident that both technologies will converge into a single one.

However, when we compare the performance of parallel applications running on clusters and on MPPs, the figures show quite a different picture: clusters are still far behind their expensive relatives. The Numerical Aerospace Simulation (NAS) Facility at NASA has carried out a careful study of parallel computing performance. This study, better known as the NAS Parallel Benchmarks, corroborates the superiority of MPPs.

Taking these two observations into consideration, we concluded that the gap between MPPs and clusters originates in the parallel programming software environment normally used on clusters. While MPPs rely on custom software specifically developed to support parallel applications on a given parallel architecture, clusters often apply the "commodity" principle to the software as well. Commodity workstation software, however, has not been designed to support parallel computing.

In this project, we intend to develop a comprehensive programming environment to support parallel computing on clusters of commodity workstations. This software environment shall include a parallel language and a run-time system to support the development of high-performance parallel applications. In order to enable existing applications to run on the proposed environment, it shall also support widely adopted standards of the parallel computing community, such as POSIX and MPI. Visual tools to configure and manage the environment shall also be considered.

The proposed run-time support system will be responsible for making cluster resources available to parallel applications with the lowest possible overhead. These resources include, amongst others, processor time, storage and communication channels. Since each parallel application (or class of applications) has particular demands regarding system resources, the run-time support system must be designed so that reconfiguration is possible and easy to carry out. The final run-time system for a given application shall include only the components that the application will actually use. Object orientation and component engineering can help in developing this kind of system; however, automatically selecting and assembling components is still a challenge, and it shall be addressed in this project. Besides being highly configurable, the run-time support system shall support parallel operations, such as group communication and collective operations.
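One way to obtain a run-time that contains only the components an application uses is to assemble it at compile time from declared requirements. The sketch below assumes that each application states its needs in a traits structure (`Solver_Traits`, hypothetical) and that a `Runtime` template picks one component per role from those traits; every name here is illustrative and does not describe the project's actual design. The collective operation is reduced to a trivial local broadcast, and the traits are written by hand, so the open problem of deriving them automatically is not addressed.

```cpp
#include <cstddef>
#include <type_traits>
#include <vector>

// Illustrative sketch only: all component and trait names are hypothetical.

// Two alternative scheduler components.
struct Cooperative_Scheduler { static constexpr bool preemptive = false; };
struct Preemptive_Scheduler  { static constexpr bool preemptive = true;  };

// Two alternative communication components: point-to-point only, or
// point-to-point plus a collective broadcast.
struct Point_To_Point {
    static constexpr bool collective = false;
};
struct Group_Communicator {
    static constexpr bool collective = true;
    // Collective operation simulated locally: every one of `members`
    // group members receives a copy of the root's value.
    template <typename T>
    static std::vector<T> broadcast(const T& value, std::size_t members) {
        return std::vector<T>(members, value);
    }
};

// Requirements declared by one (class of) application.
struct Solver_Traits {
    static constexpr bool needs_preemption  = false;
    static constexpr bool needs_collectives = true;
};

// The run-time is assembled at compile time: for each role, only the
// component selected by the traits becomes part of the resulting type.
template <typename Traits>
struct Runtime {
    using Scheduler = std::conditional_t<Traits::needs_preemption,
                                         Preemptive_Scheduler,
                                         Cooperative_Scheduler>;
    using Communicator = std::conditional_t<Traits::needs_collectives,
                                            Group_Communicator,
                                            Point_To_Point>;
};

using Solver_Runtime = Runtime<Solver_Traits>;
```

With these traits, `Solver_Runtime::Scheduler` resolves to `Cooperative_Scheduler` and `Solver_Runtime::Communicator` to `Group_Communicator`; changing a trait reconfigures the run-time without touching the application code. Selecting components through templates keeps the choice free of run-time dispatch overhead, at the cost of rebuilding for each configuration.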

Many existing parallel applications have been written against international standard interfaces. Regardless of the development methodology and programming environment adopted, most of these applications rely, at their lowest levels, on some subset of POSIX system calls to access local resources, and on some inter-node communication package, typically the Message Passing Interface (MPI). Therefore, supporting these two interfaces in our run-time support system may allow many existing parallel applications to run on our clusters, and, since only the interfaces are going to be supported (not their traditional implementations), these applications should run more efficiently in the new environment. Moreover, a version of the run-time system shall run at guest level on UNIX, either to support applications with deep UNIX dependencies or for debugging.
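The distinction between supporting an interface and reusing its traditional implementation can be illustrated with a small sketch. It assumes two hypothetical back-ends: `Guest_Unix_Backend`, which forwards to the host's real POSIX `write()` as a guest-level run-time might, and `Native_Backend`, which stands in for a native driver by appending to an in-memory console buffer. The application function `log_message` (also hypothetical) is written once against the `write`-style interface and compiled against either back-end.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unistd.h>

// Hypothetical guest-level back-end: forwards to the host's POSIX write(2).
struct Guest_Unix_Backend {
    static long write(int fd, const void* buf, std::size_t n) {
        return static_cast<long>(::write(fd, buf, n));
    }
};

// Hypothetical native back-end: a stand-in for the run-time's own console
// driver that simply appends the bytes to an in-memory buffer.
struct Native_Backend {
    static inline std::string console;
    static long write(int fd, const void* buf, std::size_t n) {
        assert(fd == 1 || fd == 2);  // only stdout/stderr are modelled here
        console.append(static_cast<const char*>(buf), n);
        return static_cast<long>(n);
    }
};

// Application code written once against the write-style interface;
// the back-end is chosen when the application is built.
template <typename Backend>
long log_message(const std::string& msg) {
    return Backend::write(1, msg.data(), msg.size());
}
```

For example, `log_message<Native_Backend>("hello\n")` returns 6 and leaves `"hello\n"` in `Native_Backend::console`, while `log_message<Guest_Unix_Backend>("hello\n")` prints the same text through the host operating system, which is the kind of setup that makes guest-level execution useful for debugging.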

Writing parallel applications in standard sequential languages enriched with communication libraries is not always adequate. Adopting a parallel language eases application development while also increasing performance through better resource utilization. Moreover, some optimizations are only possible when the compiler is aware of the application's parallelism. Thus, our environment will include a parallel programming language offering suitable mechanisms to exploit both fine- and medium-grain concurrency. To reduce the impact of introducing a new language, our parallel language shall be a C++ extension. Besides being widely used, C++ is object-oriented, which opens the way for distributed, parallel objects.

One of the most serious problems in a cluster environment is keeping all the paraphernalia working. Noise, heat and a confusing tangle of wires are normal when "commodity" is involved. Since this cannot be avoided, we will work to make this "chaos" manageable. Configuring the run-time support system on each node, reconfiguring it on the fly for each new parallel application, monitoring the nodes' operational condition and balancing resource utilization are just some of the tasks that shall be carried out by our management tools. These tools shall implement a central management console with a friendly graphical interface.

A parallel programming environment is of no value if parallel applications cannot benefit from it. In order to validate our environment, we intend to port and develop real parallel applications to run on it. Both of our industrial partners shall collaborate intensively during the requirements analysis phase of the platform development, so as to ensure that the target platform will be useful for real applications. Both partners, Pure-Systems GmbH and Altus Systems Ltda, will explore the potential of our platform in the area of high-performance embedded systems, which may include, for example, the control of complex industrial processes.

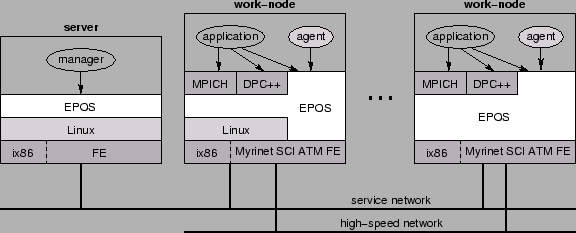

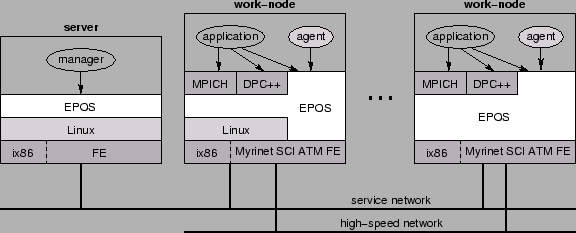

An overview of the proposed environment is shown in figure 1. Our current clusters are organized so that all nodes are connected by a service network (currently Fast Ethernet) and the work-nodes are also interconnected by a high-speed network (currently one of Myrinet, SCI, ATM or Gigabit Ethernet). One special node, the server node, runs the set of management tools and supports the work-nodes via the service network.
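The two-network organization described above can be summarized as a routing rule. The sketch assumes one reading of the text: management traffic always travels over the service network, application traffic between two work-nodes uses the high-speed network, and anything else falls back to the service network. The enumerations and the function `select_network` are hypothetical names introduced only for illustration.

```cpp
// Hypothetical model of the cluster layout: node roles, the two networks,
// and the two kinds of traffic they carry.
enum class Role { Server, Work };
enum class Network { Service, High_Speed };
enum class Traffic { Application, Management };

// Assumed routing rule derived from the cluster organization above.
constexpr Network select_network(Role from, Role to, Traffic kind) {
    // Management traffic (server tools, management agents) uses the
    // service network, which reaches every node.
    if (kind == Traffic::Management)
        return Network::Service;
    // Application traffic between two work-nodes uses the high-speed network.
    if (from == Role::Work && to == Role::Work)
        return Network::High_Speed;
    // Any other combination falls back to the service network.
    return Network::Service;
}
```

Keeping management traffic off the high-speed network is what allows the management agents on the work-nodes to coexist with a running parallel application without competing for its communication bandwidth.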

A parallel application running on the work-nodes will have access to three interfaces: DPC++, MPI and EPOS. EPOS may run either as a native operating system or at guest level on UNIX. Besides the processes of the parallel application, work-nodes may also run management agents.